Programmers spend countless hours interacting with one fundamental tool: their text editor. Before you commit, push, or ship a single line of code, you must first put your hands on the keyboard and type it into the machine. No tool has a bigger impact on the visceral and tactile experience of creating software.

Yet despite the critical role that editors play in my life as a programmer, I have never found an editor that I truly love. So 16 years ago, I decided to build one. It took a failed attempt, many hard lessons, and the help of some talented friends, but the tool I've been striving to create is finally emerging in the 135k lines of Rust that constitute Zed.

Our first goal with Zed is simple: to build an editor that we love using. A tool with consistent high performance. A tool that assists us, but also stays out of our way. A tool that looks great, but also disappears. We want Zed to advance the state of the art for text editing, combining the strengths of other editors while avoiding their weaknesses, then going further. Anything less isn't worth building.

But beyond executing on the fundamentals, we also see an opportunity to radically improve the way developers collaborate on software. By integrating collaboration as a first-class concern of the code authoring environment itself, Zed will make it easier to link conversations to any piece of text, regardless of whether it was committed last year or just written moments ago. Zed will also make it seamless to write and discuss code with fellow developers in real time.

This focus on collaboration is one of Zed's defining characteristics, so with our first blog post, we'd like to explore the technology we've built into the core of the editor to make it possible.

The pre-history of collaborative editing

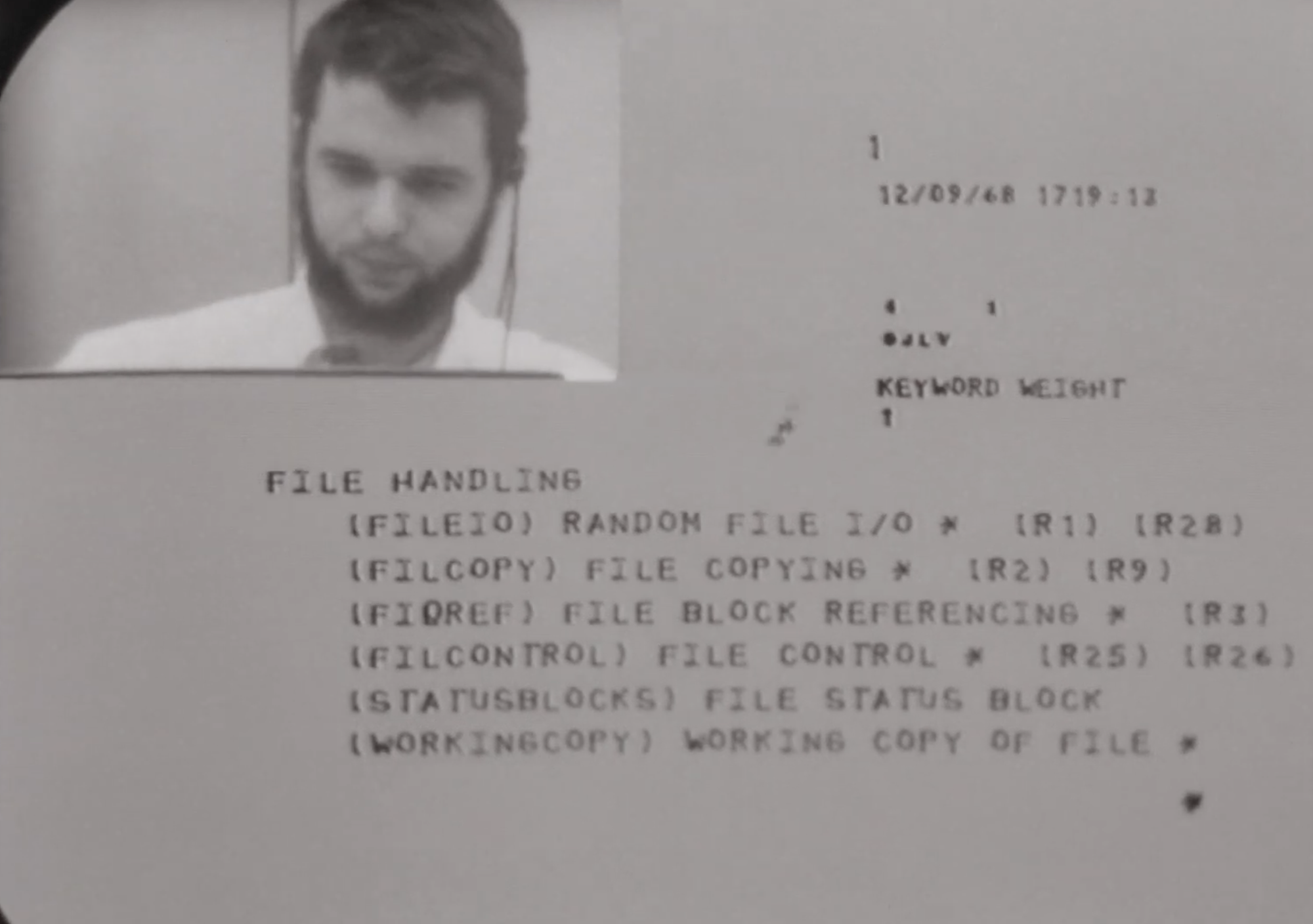

In December of 1968, Douglas Engelbart demonstrated a host of technologies in front of a standing-room-only audience in San Francisco, including interactive editing, hypertext, and the mouse. The ideas he presented went on to shape modern computing, but when I first watched his famous demo, I was surprised to learn that the system that amazed everyone was actually a collaborative text editor. The very thing I had been trying to build! In 1968.

To build their collaborative editor, Engelbart's team needed to create their own programming language, time-sharing operating system, and cathode ray tube displays. As we set out to build Zed, our task was obviously vastly easier than theirs in almost every way, but we did face one problem that they did not: asynchronous coordination.

In Engelbart's system, collaborators were all connected to the same physical machine via individual terminals. I'm unsure whether their tool ever supported fine-grained concurrent editing, but at least in theory, it would have been possible in this setup to synchronize edits to a shared buffer with a mutex. But this isn't how computers are organized today. Instead of sharing a single machine via directly-connected terminals, we use personal computers that are connected via the internet. And we collaborate over much greater distances. Even at the speed of light, synchronizing access to a shared buffer between two different continents would introduce prohibitive editing latency.

The challenge of asynchronous coordination

To collaborate over the internet, we need an approach that allows individuals to edit their own replicas of a document independently and have their documents converge to the same contents after they exchange data asynchronously. It turns out this is a hard problem.

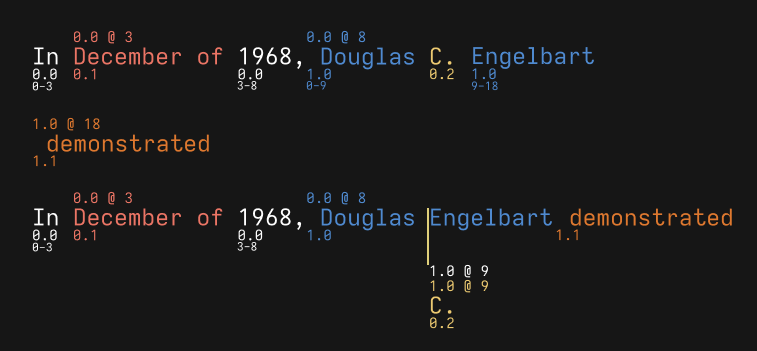

The animation below illustrates the basic challenge. We start with two replicas of the text "In 1968,". We then concurrently insert different text into each replica and transmit a description of our edits to the other replica. But if we naively apply a remote edit without accounting for concurrent changes, we can end up applying it to an invalid location, causing the contents of the replicas to diverge.
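To make the divergence concrete, here is a minimal sketch in Rust. The helper names `insert_at` and `naive_exchange` are hypothetical, and the exact spacing of the inserted strings is an assumption chosen so the offsets line up with the example. Offsets are byte offsets, which is fine for this ASCII text.

```rust
/// Naively insert `new_text` at a byte offset, ignoring any concurrent edits.
fn insert_at(text: &str, offset: usize, new_text: &str) -> String {
    let mut result = String::with_capacity(text.len() + new_text.len());
    result.push_str(&text[..offset]);
    result.push_str(new_text);
    result.push_str(&text[offset..]);
    result
}

/// Each replica applies its own edit first, then applies the other replica's
/// edit at an offset that was only valid on the sender's copy.
fn naive_exchange() -> (String, String) {
    let base = "In 1968,";
    let edit_0 = (3, "December of "); // made on replica 0
    let edit_1 = (8, " Douglas Engelbart"); // made on replica 1

    let replica_0 = insert_at(&insert_at(base, edit_0.0, edit_0.1), edit_1.0, edit_1.1);
    let replica_1 = insert_at(&insert_at(base, edit_1.0, edit_1.1), edit_0.0, edit_0.1);
    (replica_0, replica_1)
}
```

Replica 1 happens to end up with sensible text here, but replica 0 applies the remote edit at offset 8 of a string that has already grown, so the two copies no longer match.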

Eventually-consistent text editing with CRDTs

One solution is to somehow transform incoming edits to reflect concurrent changes. In the animation below, you can see how we transform the blue insertion, changing its position from 8 to 20.
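For the simplest case of two concurrent insertions, the position shift can be sketched as follows. The function name `transform_insert` is hypothetical, and treating equal offsets as "shift" is an assumption made for illustration; a real system needs a tie-breaking rule that every replica applies identically.

```rust
/// Shift an incoming insertion's offset to account for a concurrent insertion
/// that has already been applied to the local replica.
fn transform_insert(incoming_offset: usize, applied_offset: usize, applied_len: usize) -> usize {
    if applied_offset <= incoming_offset {
        // The local insertion landed at or before the incoming position,
        // so the incoming position moves right by the inserted length.
        incoming_offset + applied_len
    } else {
        // The local insertion landed after the incoming position: no change.
        incoming_offset
    }
}
```

With a 12-byte insertion already applied at offset 3, an incoming insertion at offset 8 moves to offset 20, matching the example above.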

This is simple in concept, but defining a correct and performant function that can transform operations is non-trivial, and was the subject of a whole subdiscipline of computer science research known as Operational Transformation, or OT. We experimented with this approach when we first explored collaborative editing back in 2017, but we ultimately chose to work with an alternative theoretical framework called Conflict-Free Replicated Data Types (CRDTs), which we found to be more powerful and intuitive.

With CRDTs, instead of transforming concurrent operations so they can be applied in a different order, we structure our data so that concurrent operations are inherently commutative, allowing us to apply them directly on any replica without transformation. But how do we make text edits commutative?

The key is to express edits in terms of logical locations rather than absolute offsets. In the examples above, what if instead of referring to insertion locations in terms of numeric offsets, we described them via content instead? Then it wouldn't matter that concurrent edits have shifted the text, because we only depend on content to resolve the location of the remote edit.

This approach obviously wouldn't work in practice. The text "68," might appear multiple times, or a concurrent edit may have completely deleted it. To use this sort of content-based logical addressing, we need to do it in a way that's durable in the presence of concurrent changes. But how?

Stable references in unstable text

The problem with expressing logical positions in terms of the buffer's current content is that the text isn't stable. But one thing that is stable is the editing history. We can treat every piece of text that's ever been inserted as immutable. Subsequent edits might split that text apart or delete portions of it, but this doesn't change the text that was originally inserted. If we assign a unique identifier to every insertion, we can now unambiguously refer to a logical location using this identifier combined with an offset into the inserted text. We refer to these (insertion id, offset) pairs as anchors.

To generate these unique identifiers, we centrally assign each replica a unique id when it's created, then combine it with an incrementing sequence number. By inheriting uniqueness from the replica id, replicas can generate ids concurrently without risk of collision.
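As a rough sketch, identifiers and anchors along these lines could be modeled as below. The type names, field names, and integer widths are assumptions for illustration, not Zed's actual definitions.

```rust
/// Identifies one insertion: the replica that made it plus that replica's
/// local sequence number. Displayed in this post as `replica.seq`, e.g. 0.1.
#[derive(Clone, Copy, Debug, PartialEq, Eq, Hash, PartialOrd, Ord)]
struct InsertionId {
    replica_id: u16,
    seq: u32,
}

/// A stable logical position: an offset into the immutable text of one insertion.
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
struct Anchor {
    insertion_id: InsertionId,
    offset: usize,
}

/// Hands out ids for a single replica by incrementing a local counter.
struct IdGenerator {
    replica_id: u16,
    next_seq: u32,
}

impl IdGenerator {
    fn new(replica_id: u16) -> Self {
        Self { replica_id, next_seq: 0 }
    }

    fn next_id(&mut self) -> InsertionId {
        let id = InsertionId { replica_id: self.replica_id, seq: self.next_seq };
        self.next_seq += 1;
        id
    }
}
```

Because the replica id is part of every identifier, two generators never need to coordinate: ids from replica 0 and replica 1 differ even when their sequence numbers are equal.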

At the start of a collaboration session, participants are assigned replica ids. Replica 0 assigns an identifier of 0.0 to the buffer's initial text, then transmits a copy to replica 1. This initial fragment of text, 0.0, is the first insertion, and it will remain immutable for the life of the buffer.

Now each participant inserts text concurrently, describing the insertion location with offsets relative to insertion 0.0. Each new insertion is assigned its own unique id. When replica 0 inserts "December of" within insertion 0.0 at offset 3, the fragment labeled 0.0 is split into two pieces. Replica 1 appends "Douglas Engelbart" at the end of insertion 0.0, at offset 8. Both participants also transmit their operations to the other party.

Now the replicas apply each other's operations. First, replica 1 incorporates the red insertion with id 0.1, splitting insertion 0.0 in two just as occurred when replica 0 originally inserted this text. Then replica 0 incorporates the blue insertion with id 1.0.

It scans through its fragments, searching for offset 8 of insertion 0.0. The first fragment belongs to 0.0, but it's only 3 characters long. The second fragment belongs to a different insertion, 0.1, and is skipped. Finally, we reach the second fragment containing text from insertion 0.0. This one contains offset 8, and so we insert the blue text there. The replicas converge.
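The scan described above can be sketched in a few lines of Rust. This is a simplified illustration under stated assumptions: the `Fragment` and `Insertion` types and the `apply_insertion` function are hypothetical, offsets are byte offsets into ASCII text, the search is linear, and ordering of concurrent insertions at the same location is ignored.

```rust
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
struct InsertionId {
    replica_id: u16,
    seq: u32,
}

/// A contiguous piece of one insertion's text as it currently sits in the buffer.
#[derive(Clone, Debug)]
struct Fragment {
    insertion_id: InsertionId,
    start: usize, // offset of this piece within the original insertion
    text: String,
}

/// An insertion operation, positioned by an (insertion id, offset) anchor.
struct Insertion {
    id: InsertionId,
    parent: InsertionId,
    offset: usize,
    text: String,
}

/// Find the fragment of `op.parent` that contains `op.offset`, split it there,
/// and place the new text at the split. Returns false if no fragment matches.
fn apply_insertion(fragments: &mut Vec<Fragment>, op: &Insertion) -> bool {
    for i in 0..fragments.len() {
        let start = fragments[i].start;
        let end = start + fragments[i].text.len();
        if fragments[i].insertion_id != op.parent || op.offset < start || op.offset > end {
            continue; // a different insertion, or a piece that doesn't cover the offset
        }
        let tail_text = fragments[i].text.split_off(op.offset - start);
        fragments.insert(i + 1, Fragment { insertion_id: op.id, start: 0, text: op.text.clone() });
        if !tail_text.is_empty() {
            fragments.insert(
                i + 2,
                Fragment { insertion_id: op.parent, start: op.offset, text: tail_text },
            );
        }
        return true;
    }
    false
}

/// Concatenate the fragments to get the buffer's contents.
fn buffer_text(fragments: &[Fragment]) -> String {
    fragments.iter().map(|f| f.text.as_str()).collect()
}
```

Applying insertion 0.1 and then 1.0 on one replica, and 1.0 and then 0.1 on the other, yields the same four fragments and the same text on both.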

This process can continue recursively, with insertions building upon each other in a tree. In the animation below, both replicas insert additional text at different offsets within the blue insertion with id 1.0. To apply the remote operations, we again scan through the document looking for the fragment of insertion 1.0 that contains the specified offset.

In these examples, we're inserting multiple characters at a time, but it's worth noting that in practice, collaborators are often inserting individual characters rather than pasting whole words from their clipboard. Tracking all of this metadata per-character may seem like a lot of overhead, but in practice it isn't an issue on modern computing hardware. Even long edit histories barely compare to the memory savings Zed obtains from not being built with Electron.

You may also be asking: Isn't scanning through the entire document like this to apply every remote edit insanely slow? In a future post, I'll explain how we use a copy-on-write B-tree to index these fragments in order to avoid linear scans, but this simplified explanation should give you a basic framework to understand how collaborative editing works in Zed.

Deletion

If every insertion is immutable, how do we remove text from the document when a user deletes? Rather than mutating inserted text, we instead mark deleted fragments with tombstones. Fragments with tombstones are hidden in the text we display for the user, but they can still be used to resolve logical anchors to concrete locations in the document.

In the animation below, we insert text in replica 1 at a position that is concurrently deleted in replica 0. Because the deleted text is merely hidden rather than actually thrown away, we can still apply the insertion when it arrives at replica 0.

If deletions only encode a range, divergence can occur if text is concurrently inserted inside the deleted range. In the example below, note how the yellow "C." is visible in replica 0 but hidden in replica 1.

To avoid this issue, we also associate deletions with a vector timestamp that encodes the latest observed sequence number for each replica. Using this, we can exclude insertions that occurred concurrently, only hiding text that was actually visible to the user performing the deletion.

The animation below is much like the one above, except this time we augment the deletion operation with a version vector. When we apply the deletion on replica 1, we exclude the yellow insertion because its id contains a sequence number that isn't included in the deletion's version. This causes the yellow insertion to remain visible on both replicas, preserving the deleting user's intent.
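Here is a minimal sketch of how a version vector can filter a deletion. All names are hypothetical, the version vector is modeled as a plain `HashMap` from replica id to the latest observed sequence number, and the sketch assumes fragments have already been split at the boundaries of the deleted range.

```rust
use std::collections::HashMap;

#[derive(Clone, Copy, Debug, PartialEq, Eq)]
struct OpId {
    replica_id: u16,
    seq: u32,
}

struct Fragment {
    insertion_id: OpId,
    start: usize, // offset of this piece within its original insertion
    text: String,
    tombstones: Vec<OpId>, // ids of the deletions that hide this fragment
}

struct Deletion {
    id: OpId,
    start: (OpId, usize), // anchor at the start of the deleted range
    end: (OpId, usize),   // anchor at the end of the deleted range
    version: HashMap<u16, u32>, // latest sequence number seen per replica
}

/// True if the deleting user had already seen the operation `id`.
fn observed(version: &HashMap<u16, u32>, id: OpId) -> bool {
    version.get(&id.replica_id).map_or(false, |&latest| id.seq <= latest)
}

/// Tombstone every fragment in the range that the deleting user had observed.
fn apply_deletion(fragments: &mut [Fragment], op: &Deletion) {
    let mut in_range = false;
    for fragment in fragments.iter_mut() {
        if (fragment.insertion_id, fragment.start) == op.start {
            in_range = true;
        }
        if !in_range {
            continue;
        }
        if observed(&op.version, fragment.insertion_id) {
            fragment.tombstones.push(op.id);
        }
        if (fragment.insertion_id, fragment.start + fragment.text.len()) == op.end {
            break;
        }
    }
}

/// The text shown to the user: fragments without tombstones.
fn visible_text(fragments: &[Fragment]) -> String {
    fragments
        .iter()
        .filter(|f| f.tombstones.is_empty())
        .map(|f| f.text.as_str())
        .collect()
}
```

If replica 1 holds a concurrent insertion in the middle of the deleted range, that fragment's id is absent from the deletion's version, so it receives no tombstone and stays visible.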

Like insertions, deletions are associated with unique identifiers, which we record on the tombstone. We'll see how these deletion identifiers are used later when we discuss undo and redo operations.

Concurrent insertions at the same location

When concurrent insertions occur at the same location, it doesn't matter how we order the insertions, but it definitely does matter that their ordering is consistent across all replicas. One way to achieve consistency is to order all insertions at the same location by their id.

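However, the problem with this approach is that it can become impossible for certain replicas to insert text prior to an insertion they have already observed. For example, if ids were compared by replica id first and sorted in ascending order, replica 1 could never place text before an insertion from replica 0 at the same location, because every id it generates would sort after replica 0's.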

We need a consistent ordering of these insertions that respects causality. Our solution is to augment insertions with Lamport timestamps. These logical timestamps are derived from a scalar-valued Lamport clock that is maintained on every replica. Whenever a replica generates an operation, it derives a Lamport timestamp by incrementing its Lamport clock. Whenever a replica receives an operation, it sets its own Lamport clock to the greater of the clock's current value and the timestamp of the incoming operation.

This scheme guarantees that if an operation was present at the time of another operation, then it will have a lower timestamp. Another way of phrasing this is that the Lamport timestamp allows us to sort the operations in causal order. The converse isn't true. Just because operation A has a lower Lamport timestamp than operation B, it doesn't necessarily mean that it causally preceded operation B, because we have no guarantees about the relationship between the Lamport timestamps of concurrent operations. But we've already established that we don't care how concurrent insertions are ordered, so long as our ordering is consistent.

By sorting insertions descending by their Lamport timestamp and breaking any ties based on their replica id, we achieve a consistent ordering scheme that respects causality.
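The clock rules and the resulting sort order can be sketched as follows. The names are hypothetical, and breaking ties by ascending replica id is an assumption; any direction works as long as every replica uses the same one.

```rust
/// A scalar Lamport clock maintained by one replica.
struct LamportClock {
    value: u32,
}

impl LamportClock {
    fn new() -> Self {
        Self { value: 0 }
    }

    /// Called when this replica generates an operation.
    fn tick(&mut self) -> u32 {
        self.value += 1;
        self.value
    }

    /// Called when this replica receives an operation from another replica.
    fn observe(&mut self, timestamp: u32) {
        self.value = self.value.max(timestamp);
    }
}

#[derive(Clone, Debug, PartialEq, Eq)]
struct Insertion {
    lamport: u32,
    replica_id: u16,
    text: String,
}

/// Order insertions that share a location: highest Lamport timestamp first,
/// with ties (concurrent insertions) broken by replica id.
fn order_at_same_location(insertions: &mut [Insertion]) {
    insertions.sort_by(|a, b| {
        b.lamport
            .cmp(&a.lamport)
            .then(a.replica_id.cmp(&b.replica_id))
    });
}
```

An insertion made after observing another one always carries a higher timestamp, so it sorts first and ends up before the text its author had already seen at that location.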

Undo and redo

In non-collaborative systems, the undo and redo history can be represented as stacks of simple edit operations. When you want to undo something, you simply pop the edit on the top of the undo stack, apply its inverse to the current text, and push it to the redo stack. But this only allows for a single global undo history for the entire document. The offset of any operation in the history is only valid for the specific state of the document in which that operation was originally applied. This means that operations must always be undone in the exact reverse order in which they occurred.

But when editing collaboratively, a single undo history for the entire buffer doesn't work. When you undo, you expect to undo text that you yourself typed. Each participant needs their own undo stack. This means we need to be capable of undoing and redoing operations in an arbitrary order. A shared global stack of edit operations isn't enough.

Instead, we maintain an undo map, which associates operation ids with a count. If the count is zero, the operation has not been undone. If the count is odd, the operation has been undone. If it's even, the operation has been redone. Undo and redo operations simply update counts in this map for specific operation ids. Then, when we're deciding whether a certain fragment is visible, we first check if that insertion has been undone (its undo count is odd). We then check if it has any deletion tombstones, and whether the undo count of any of those deletions is even.

When sending undo/redo operations, it's fine to assign these undo counts directly. If two users both undo the same operation concurrently, they'll end up setting its undo count to the same value. This preserves their intent, since they both wanted to undo or redo it. In practice, we currently only allow users to undo their own operations, but we may eventually introduce the ability to undo operations of collaborators.
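A minimal sketch of the undo map and the visibility check might look like this. The types and method names are hypothetical, and the fragment is reduced to just the ids needed for the check.

```rust
use std::collections::HashMap;

#[derive(Clone, Copy, Debug, PartialEq, Eq, Hash)]
struct OpId {
    replica_id: u16,
    seq: u32,
}

/// Maps operation ids to undo counts. A missing entry means a count of zero.
#[derive(Default)]
struct UndoMap {
    counts: HashMap<OpId, u32>,
}

impl UndoMap {
    /// Undo and redo operations assign the count directly.
    fn set_count(&mut self, op: OpId, count: u32) {
        self.counts.insert(op, count);
    }

    fn count(&self, op: OpId) -> u32 {
        self.counts.get(&op).copied().unwrap_or(0)
    }

    /// An odd count means the operation is currently undone.
    fn is_undone(&self, op: OpId) -> bool {
        self.count(op) % 2 == 1
    }
}

struct Fragment {
    insertion_id: OpId,
    deletions: Vec<OpId>, // ids recorded on this fragment's tombstones
}

/// A fragment is visible if its insertion is in effect and none of its
/// deletions are in effect (a deletion with an even count is in effect).
fn is_visible(fragment: &Fragment, undo_map: &UndoMap) -> bool {
    let inserted = !undo_map.is_undone(fragment.insertion_id);
    let deleted = fragment.deletions.iter().any(|d| !undo_map.is_undone(*d));
    inserted && !deleted
}
```

Because an undo is transmitted as "set this operation's count to N" rather than "increment it", two users who concurrently undo the same operation both write the same value, and the map ends up identical on every replica.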

Conclusion

There's obviously a lot more to cover. How do we actually implement this scheme so that it's efficient? How do we integrate CRDTs into a broader system that creates the illusion of a shared workspace? How do we make this complex distributed system reliable? And what else can we do with CRDTs other than collaborate? Then there's the rest of the editor. Ropes. Our GPU-accelerated UI framework. Tree-sitter. The integrated terminal. And much, much more.

We're looking forward to talking about all of it in the months and years to come. And more importantly, we're looking forward to applying this technology to ship an editor that makes you happier and more productive. Thanks for reading!